As organizations increasingly adopt container orchestration platforms like Kubernetes to deploy and manage applications, robust security practices become paramount. In collaboration with Panther Labs, we dig into Kubernetes security, starting with the fundamentals of the Kubernetes API audit log and its crucial role in identifying and mitigating potential threats. We then discuss strategies for hunting and building detections across key threat tactics: Initial Access, Execution, Persistence, Privilege Escalation, Defense Evasion and Discovery. For each threat, we walk you through a practical example, including what the log looks like and exactly how to build the detection.

The blog is rather technical, and if you want to skip the reading and just get the detections, here is a link to the detection pack published in the Panther repository.

Before we get started, I’d like to express my gratitude to the incredible team at Panther Labs, specifically Nicholas Hakmiller and Ariel Ropek, for making all of this possible. A special thanks also goes out to our Snowflake Incident Response team, Alex Windle and Devin Kuttiamkonath, for their invaluable help deploying these solutions to our environment, complete with response books. Lastly, thank you to my amazing manager, Haider Dost, for his incredible thought leadership and willingness to give back to security.

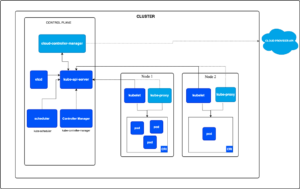

Kubernetes, also known as K8s, is an open source system for automating deployment, scaling and management of containerized applications. Kubernetes enjoys widespread popularity, with numerous cloud providers offering managed K8s platforms, while others host their own clusters on-premises. Regardless of the deployment model, the control plane serves as the central component of the platform, with the API Server at its core. The Kubernetes API enables you to query and manage the state of objects within the cluster, such as nodes, pods, containers, and events, and is also a prime target for malicious attackers.

All Roads Lead back to the API Server [https://kubernetes.io/docs/concepts/architecture/]

The Kubernetes audit log is a chronological record of the requests that reach the API Server, and therefore of the activities and interactions within a K8s cluster (subject to the cluster’s audit policy). It is a detection engineer’s best friend.

Per the official documentation, the audit log answers the following questions:

- What happened?
- When did it happen?
- Who initiated it?
- On what did it happen?
- Where was it observed?
- From where was it initiated?
- To where was it going?

We will be working with the audit Event object, which captures all of the information that can be included in the API audit log. Whatever deployment model you follow, the Kubernetes audit log will mostly follow this schema (GKE is the black sheep, but its logs can be mapped back to this form).

| Field | Description |
| --- | --- |
| kind | Event |
| level | Audit level at which the event was generated |
| auditID | Unique audit ID generated for each request |
| stage | Stage of request handling when this event instance was generated |
| requestURI | The request URI as sent by the client to the server |
| verb | The Kubernetes verb associated with the request. For non-resource requests, this is the lower-cased HTTP method |
| user | Authenticated user information |
| impersonatedUser | Impersonated user information |
| sourceIPs | Source IPs from which the request originated, plus intermediate proxies. The IPs are listed in this order: (1) the X-Forwarded-For request header IPs; (2) the X-Real-Ip header, if not present in the X-Forwarded-For list; (3) the remote address of the connection, if it doesn’t match the last IP in the list so far (X-Forwarded-For or X-Real-Ip). Note: all but the last IP can be arbitrarily set by the client. |
| userAgent | The user agent string reported by the client. Note that the user agent is provided by the client and must not be trusted. |
| objectRef | Object reference this request is targeted at. Does not apply for List-type requests or non-resource requests. |
| responseStatus | The response status, populated even when the ResponseObject is not a Status type. For successful responses, this will only include the Code and StatusSuccess. For non-status type error responses, this will be auto-populated with the error Message. |
| requestObject | API object from the request, in JSON format. The RequestObject is recorded as-is in the request (possibly re-encoded as JSON), prior to version conversion, defaulting, admission or merging. It is an external versioned object type, and may not be a valid object on its own. Omitted for non-resource requests. Only logged at Request level and higher. |
| responseObject | API object returned in the response, in JSON. The ResponseObject is recorded after conversion to the external type, and serialized as JSON. Omitted for non-resource requests. Only logged at RequestResponse level. |
| requestReceivedTimestamp | Time the request reached the API server. |
| stageTimestamp | Time the request reached the current audit stage. |
| annotations | An unstructured key-value map stored with an audit event that may be set by plugins invoked in the request serving chain, including authentication, authorization and admission plugins. These annotations are for the audit event and do not correspond to the metadata.annotations of the submitted object. Keys should uniquely identify the informing component to avoid name collisions (e.g. podsecuritypolicy.admission.k8s.io/policy). Values should be short. Annotations are included at the Metadata level. |

Here is an example Kubernetes audit event, which shows how these fields fit together and surface the information that matters for detection and investigation:

```jsonc
{
  "kind": "Event",
  "apiVersion": "audit.k8s.io/v1",
  "level": "RequestResponse", // request and response objects are only logged at this level
  "auditID": "abcd1234-5678-90ef-ghij-klmnopqrstuv",
  "stage": "ResponseComplete",
  "requestURI": "/api/v1/namespaces/default/pods",
  "verb": "create",
  "user": {
    "username": "admin",
    "groups": [
      "system:masters",
      "developers"
    ]
  },
  "impersonatedUser": {
    "username": "john.doe" // identity the authenticated user "admin" is acting as
  },
  "sourceIPs": [
    "192.168.1.100", // from the X-Forwarded-For header (client-controlled)
    "10.0.0.5",      // from the X-Real-Ip header (client-controlled)
    "172.16.0.8"     // remote address of the connection
  ],
  "userAgent": "kubectl/v1.22.1 (linux/amd64) kubernetes/abc123",
  "objectRef": {
    "resource": "pods",
    "namespace": "default",
    "name": "nginx-deployment-7db9fccd9b-abcde",
    "apiVersion": "v1"
  },
  "responseStatus": {
    "metadata": {},
    "code": 201
  },
  "requestReceivedTimestamp": "2023-11-22T10:30:00Z",
  "stageTimestamp": "2023-11-22T10:30:05Z",
  "annotations": {
    "authorization.k8s.io/decision": "allow",
    "authorization.k8s.io/reason": "RBAC: allowed by role binding"
  },
  "requestObject": {
    "kind": "Pod",
    "apiVersion": "v1",
    "metadata": {
      "name": "nginx-deployment-7db9fccd9b-abcde",
      "namespace": "default"
    },
    "spec": {
      "containers": [
        {
          "name": "nginx",
          "image": "nginx:1.18"
        }
      ]
    }
  },
  "responseObject": {
    "kind": "Pod",
    "apiVersion": "v1",
    "metadata": {
      "name": "nginx-deployment-7db9fccd9b-abcde",
      "namespace": "default",
      "creationTimestamp": "2023-11-22T10:30:05Z"
    },
    "spec": {
      "containers": [
        {
          "name": "nginx",
          "image": "nginx:1.18"
        }
      ]
    }
  }
}
```
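To tie the questions listed earlier to concrete fields, here is a minimal Python sketch that summarizes one audit event. It assumes the event has already been parsed into a dict that uses the camelCase field names from the schema table; `summarize_event` and its output keys are hypothetical, not part of Kubernetes or Panther.

```python
from typing import Any, Dict, Optional


def summarize_event(event: Dict[str, Any]) -> Dict[str, Optional[str]]:
    """Answer the audit log's who/what/when/where questions for one event (sketch)."""
    user = event.get("user") or {}
    impersonated = event.get("impersonatedUser") or {}
    ref = event.get("objectRef") or {}
    status = event.get("responseStatus") or {}
    source_ips = event.get("sourceIPs") or []

    # On what did it happen? Join whichever parts of objectRef are present.
    target_parts = [ref.get(k) for k in ("namespace", "resource", "name", "subresource")]
    target = "/".join(p for p in target_parts if p)

    return {
        "what": f"{event.get('verb')} ({status.get('code')})",
        "when": event.get("requestReceivedTimestamp"),
        "who": user.get("username"),                # authenticated identity
        "acting_as": impersonated.get("username"),  # None unless impersonation was used
        "on_what": target or event.get("requestURI"),
        # Only the last sourceIPs entry is not client-controlled (see the table).
        "from_where": source_ips[-1] if source_ips else None,
        "user_agent": event.get("userAgent"),       # client-supplied, do not trust
    }
```

Keeping "who" and "acting_as" separate preserves the real initiator of an impersonated request, which matters for attribution.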

When approaching a new platform, Snowflake Threat Detection takes a comprehensive approach to deciding where to implement detections. Our ultimate goal is to cover every stage of the kill chain and maximize our return on investment. That said, the earlier we detect an attack, the better our chance of minimizing its impact.

Initial Access:

Threat: IOC Activity in the K8s Control Plane

Any audit log field that might reveal the source IP address of an action, in Kubernetes or elsewhere, offers a low-hanging-fruit opportunity for detecting initial access: identifying requests to the cluster from IP addresses that are known indicators of compromise or attack.

How to:

The ‘sourceIPs’ field provides an opportunity to identify potentially suspicious activity by cross-referencing it against a list of known indicators of compromise (IOCs). The field holds an array of IP addresses, each of which can be matched against a source of known indicators. The order of the array also tells you where each address came from: the last entry is the remote address of the connection as seen by the API server, while any earlier entries come from the X-Forwarded-For and X-Real-Ip headers and can be set arbitrarily by the client.

Example Log:

```json
"sourceIPs": [
  "192.168.1.100",
  "10.0.0.5",
  "172.16.0.2"
]
```

Query Snippet:

```sql
SELECT *,
    f.VALUE AS SRC_IP, -- VALUE is the result of a lateral flatten of the SOURCE_IPS array
    k.SOURCE_IPS AS IP_ADDRESSES,
    -- Only the last entry is the connection's remote address; earlier entries
    -- come from the X-Forwarded-For / X-Real-Ip headers and are client-controlled
    CASE WHEN f.INDEX = ARRAY_SIZE(k.SOURCE_IPS) - 1 THEN 'Remote_Address'
         ELSE 'Header_Supplied'
    END AS IP_TYPE
FROM panther_logs.public.kubernetes_control_plane k,
    LATERAL FLATTEN(input => k.SOURCE_IPS) f,
    iocs
WHERE f.VALUE::STRING = iocs.ioc_value
    AND iocs.ioc_type = 'ip'
```
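The same check can be prototyped outside the warehouse. This Python sketch assumes a hypothetical IOC feed given as a list of IP addresses or CIDR ranges, and a parsed audit event using the camelCase field names from the schema table. It relies on the standard-library `ipaddress` module so CIDR indicators match too, and it labels each hit by position, treating only the last ‘sourceIPs’ entry as the connection’s remote address. The function names are illustrative.

```python
import ipaddress
from typing import Any, Dict, Iterable, List, Tuple


def label_source_ips(source_ips: List[str]) -> List[Tuple[str, str]]:
    """Label each sourceIPs entry: only the last one is the connection's remote address."""
    last = len(source_ips) - 1
    return [
        (ip, "remote_address" if i == last else "header_supplied")
        for i, ip in enumerate(source_ips)
    ]


def ioc_hits(event: Dict[str, Any], ioc_networks: Iterable[str]) -> List[Tuple[str, str]]:
    """Return (ip, label) pairs from sourceIPs that fall inside any IOC IP or CIDR."""
    networks = [ipaddress.ip_network(n, strict=False) for n in ioc_networks]
    hits = []
    for ip, label in label_source_ips(event.get("sourceIPs") or []):
        try:
            addr = ipaddress.ip_address(ip)
        except ValueError:
            # Header-supplied values can be arbitrary strings; skip anything unparsable.
            continue
        # Membership is simply False when IPv4 and IPv6 are mixed.
        if any(addr in net for net in networks):
            hits.append((ip, label))
    return hits
```

Keeping the label matters for triage: a hit on a header-supplied address may simply be a spoofed header, while a hit on the remote address reflects the peer that actually connected to the API server (which may itself be a proxy).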

Threat: Unauthenticated/Anonymous Kubernetes API Request

While this is largely a misconfiguration, I thought it was severe enough to include in this blog. By default, the Kubernetes API server has anonymous authentication enabled: a request that carries no token or password is not rejected outright but is processed as the anonymous user, and RBAC then decides what that user may do. If anonymous requests are granted access beyond the defaults, anyone who can reach the API server gets unfederated access to the cluster with no traceability to a user or service.

How to:

The key field here is ‘user’, which holds the authentication information for the request. For these requests, the API Server records the unauthenticated user with the username system:anonymous and the group system:unauthenticated. Seeing this regularly is not cause for immediate concern: this user and group are used to access the cluster’s health endpoints, /readyz, /livez and /healthz. However, anonymous requests to any other endpoint may be a red flag.

Example Log:

```json
"user": {
  "username": "system:anonymous",
  "groups": ["system:unauthenticated"]
}
```

Query Snippet:

```sql
SELECT *
FROM panther_logs.public.kubernetes_control_plane
WHERE USER:username = 'system:anonymous'
    -- groups is an array, so test membership rather than equality
    AND ARRAY_CONTAINS('system:unauthenticated'::VARIANT, USER:groups::ARRAY)
    -- health endpoints in a k8s cluster; anonymous requests to these are expected behavior
    AND REQUEST_URI NOT ILIKE '%/readyz%'
    AND REQUEST_URI NOT ILIKE '%/livez%'
    AND REQUEST_URI NOT ILIKE '%/healthz%'
```
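Here is the same logic as a minimal Python sketch over a parsed audit event; `is_suspicious_anonymous` is a hypothetical helper. One deliberate difference: it compares only the path component of ‘requestURI’ to the health endpoints, which is stricter than the ILIKE '%/healthz%' patterns in the query, since those would also suppress a request for, say, a secret named healthz.

```python
from typing import Any, Dict
from urllib.parse import urlsplit

# Health endpoints that anonymous clients are expected to hit.
HEALTH_ENDPOINTS = ("/readyz", "/livez", "/healthz")


def is_suspicious_anonymous(event: Dict[str, Any]) -> bool:
    """Flag anonymous, unauthenticated requests to anything other than health endpoints."""
    user = event.get("user") or {}
    if user.get("username") != "system:anonymous":
        return False
    if "system:unauthenticated" not in (user.get("groups") or []):
        return False
    # Compare the path only, ignoring query strings such as ?verbose
    path = urlsplit(event.get("requestURI") or "").path
    is_health = any(path == ep or path.startswith(ep + "/") for ep in HEALTH_ENDPOINTS)
    return not is_health
```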

Mitigation:

This can be mitigated by passing the flag --anonymous-auth=false to the kube-apiserver during cluster setup.

Execution:

Threat: Unauthorized Kubernetes Pod Execution

Once a cluster has been compromised, our objective is to monitor for unauthorized code execution within pods. In a production environment, executing commands inside running pods is typically discouraged, except for designated debugging use cases or namespaces.

How to:

For this detection, we focus on another key field in the audit log, ‘objectRef’, which identifies the target of the request. Pod execution targets the ‘pods’ resource with the subresource ‘exec’. We can also see the particular command the user ran in the ‘requestURI’ field, where each argument is passed as a command query parameter. This is valuable for establishing a baseline of expected behavior and spotting unusual or suspicious commands, especially in environments where exec activity is common in production.

Query Snippet:

```sql
SELECT *,
    COALESCE(IMPERSONATED_USER:username, USER:username) AS src_user,
    SPLIT(REQUEST_URI, 'exec?')[1] AS command_executed -- raw query string; arguments are URL-encoded command= parameters
FROM panther_logs.public.kubernetes_control_plane
WHERE
    OBJECT_REF:resource = 'pods'
    AND OBJECT_REF:subresource = 'exec'
    -- insert allow-list below, for example namespaces where kubectl exec is expected for debugging or log gathering
    -- AND NOT OBJECT_REF:namespace = 'debugging-namespace'
LIMIT 10
```
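To turn the raw query string into something readable, the Python sketch below rebuilds the command from an exec request. It assumes each argument appears as a repeated command= query parameter in ‘requestURI’ (the way kubectl exec encodes it) and uses only the standard-library `urllib.parse`; the function names and the namespace allow-list are hypothetical.

```python
from typing import Any, Dict, Iterable, Optional
from urllib.parse import parse_qs, urlsplit


def exec_command(event: Dict[str, Any]) -> Optional[str]:
    """Rebuild the command line from an exec request's repeated command= parameters."""
    ref = event.get("objectRef") or {}
    if ref.get("resource") != "pods" or ref.get("subresource") != "exec":
        return None
    query = urlsplit(event.get("requestURI") or "").query
    # parse_qs keeps repeated keys in order and URL-decodes each value ('+' becomes a space)
    args = parse_qs(query).get("command", [])
    return " ".join(args)


def is_unexpected_exec(event: Dict[str, Any], allowed_namespaces: Iterable[str]) -> bool:
    """True for exec requests outside the namespaces where debugging is expected."""
    if exec_command(event) is None:
        return False
    namespace = (event.get("objectRef") or {}).get("namespace")
    return namespace not in set(allowed_namespaces)
```

The rebuilt command is also a natural key for the baseline described above: counting commands per namespace quickly shows which ones are routine.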

Threat: Unauthorized Kubernetes Pod Attachment

Similar to pod execution, another form of execution is pod attachment. Rather than starting a new process, pod attachment connects to the standard input, output and error streams of a process that is already running in a container. If that process is a shell or another interactive program, an attacker can drive it directly.

How to:

This is largely the same as pod execution; the key difference is that instead of the ‘exec’ subresource, we monitor for the ‘attach’ subresource.

Example Log:

```json
"objectRef": {
  "apiVersion": "v1",
  "name": "pod-name-here",
  "namespace": "debugging-namespace",
  "resource": "pods",
  "subresource": "attach"
}
```

Query Snippet:

```sql
SELECT *,
    COALESCE(IMPERSONATED_USER:username, USER:username) AS src_user,
    SPLIT(REQUEST_URI, 'attach?')[1] AS attach_options -- attach carries stream options, not a command
FROM panther_logs.public.kubernetes_control_plane
WHERE
    OBJECT_REF:resource = 'pods'
    AND OBJECT_REF:subresource = 'attach'
    -- insert allow-list below, for example namespaces where kubectl attach is expected for debugging or log gathering
    -- AND NOT OBJECT_REF:namespace = 'debugging-namespace'
LIMIT 10
```
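Because attach requests carry stream options rather than a command, a useful triage signal is whether stdin was opened, which suggests someone interacting with the process rather than only reading its output. The sketch below assumes those options (container, stdin, stdout, tty) appear as query parameters in ‘requestURI’, as they do for kubectl attach; the helper names and the idea of narrowing on stdin are hypothetical additions, not part of the query above.

```python
from typing import Any, Dict, Iterable, Optional
from urllib.parse import parse_qs, urlsplit


def attach_options(event: Dict[str, Any]) -> Optional[Dict[str, str]]:
    """Return the query options of a pods/attach request, or None for other requests."""
    ref = event.get("objectRef") or {}
    if ref.get("resource") != "pods" or ref.get("subresource") != "attach":
        return None
    query = parse_qs(urlsplit(event.get("requestURI") or "").query)
    # Keep the last value of each option, e.g. {"container": "web", "stdin": "true"}
    return {key: values[-1] for key, values in query.items()}


def is_interactive_attach(event: Dict[str, Any], allowed_namespaces: Iterable[str]) -> bool:
    """Flag attach requests that open stdin outside the allowed namespaces."""
    options = attach_options(event)
    if options is None:
        return False
    namespace = (event.get("objectRef") or {}).get("namespace")
    return options.get("stdin") == "true" and namespace not in set(allowed_namespaces)
```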

Persistence:

Threat: Kubernetes Service with Type Node Port Created

Following a cluster breach and successful code execution, attackers frequently try to establish a means of maintaining access. The creation of a new Kubernetes service of type NodePort can be problematic and may indicate malicious intent. A NodePort service opens the same port on every node in the cluster and forwards traffic arriving there to the pods behind the service, so if the nodes are reachable from outside, an attacker can expose those pods to external networks, up to and including the internet. This can be used to bypass network controls and intercept traffic, creating a direct line to the outside network.

How to:

For this detection, we focus on three things: the ‘verb’ field, which indicates the type of request submitted; ‘objectRef’, to confirm the target resource is a service; and the submitted ‘requestObject’, where we look for a service type (spec.type) of NodePort. The request and response objects also carry important information about the service being created, such as its networking configuration, ports and protocols, which can be used to understand intent.

Example Log:

```json
"objectRef": {
  "apiVersion": "v1",
  "name": "my-nodeport-service",
  "namespace": "default",
  "resource": "services"
},
"requestObject": {
  "apiVersion": "v1",
  "kind": "Service",
  "metadata": {
    "name": "my-nodeport-service",
    "namespace": "default"
  },
  "spec": {
    "selector": {
      "app": "my-app"
    },
    "ports": [
      {
        "protocol": "TCP",
        "port": 80,
        "targetPort": 80
      }
    ],
    "type": "NodePort"
  }
}
```

Query Snippet:

```sql
SELECT *,
    OBJECT_REF:name AS service,
    OBJECT_REF:namespace AS namespace,
    OBJECT_REF:resource AS resource_type,
    COALESCE(IMPERSONATED_USER:username, USER:username) AS src_user,
    USER_AGENT,
    RESPONSE_OBJECT:spec:externalTrafficPolicy AS external_traffic_policy,
    RESPONSE_OBJECT:spec:internalTrafficPolicy AS internal_traffic_policy,
    RESPONSE_OBJECT:spec:clusterIP AS cluster_ip_address,
    VALUE:port AS port, -- port the service exposes inside the cluster
    VALUE:targetPort AS target_port, -- port on the pod where traffic gets forwarded
    VALUE:protocol AS protocol, -- protocol the service uses
    VALUE:nodePort AS node_port, -- port opened on every node (assigned by the API server if not set)
    REQUEST_OBJECT:spec:type AS type,
    -- status lives at the top level of the object, not under spec
    IFF(RESPONSE_OBJECT:status:loadBalancer IS NULL, 'No LB Present',
        RESPONSE_OBJECT:status:loadBalancer) AS load_balancer,
    RESPONSE_STATUS:code AS response_status
FROM panther_logs.public.kubernetes_control_plane,
    LATERAL FLATTEN(input => RESPONSE_OBJECT:spec:ports)
WHERE
    OBJECT_REF:resource = 'services'
    AND VERB = 'create'
    AND REQUEST_OBJECT:spec:type = 'NodePort'
    -- insert allow-list for expected NodePort services
```
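As a triage aid, this Python sketch lists the ports a new NodePort service opens on every node. Like the query, it reads the ports from ‘responseObject’, because nodePort is usually assigned by the API server and is absent from the request; that assumes the cluster logs at the RequestResponse level. The function name and output keys are hypothetical.

```python
from typing import Any, Dict, List


def nodeport_exposures(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """List the ports a newly created NodePort service opens on every node."""
    ref = event.get("objectRef") or {}
    request_spec = (event.get("requestObject") or {}).get("spec") or {}
    if event.get("verb") != "create" or ref.get("resource") != "services":
        return []
    if request_spec.get("type") != "NodePort":
        return []
    # nodePort is usually assigned by the API server, so read ports from the response.
    response_spec = (event.get("responseObject") or {}).get("spec") or {}
    return [
        {
            "service": f"{ref.get('namespace')}/{ref.get('name')}",
            "node_port": port.get("nodePort"),
            "port": port.get("port"),
            "target_port": port.get("targetPort"),
            "protocol": port.get("protocol", "TCP"),
        }
        for port in response_spec.get("ports") or []
    ]
```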

Threat: Kubernetes Cron Job Created or Modified

Similar to standalone hosts, attackers often establish persistence in a Kubernetes cluster by creating or modifying cron jobs. Cron jobs enable them to execute predefined tasks on a recurring schedule.

How to:

Here, our focus is on the ‘verb’ field, specifically ‘create’, ‘update’ and ‘patch’ requests whose target object (‘objectRef’) is a cron job. Including ‘patch’ matters because tools such as kubectl edit and kubectl apply typically modify existing objects with patch requests.

Example Log:

```json
"objectRef": {
  "resource": "cronjobs",
  "name": "my-cronjob",
  "namespace": "default"
}
```

Query Snippet:

```sql
SELECT *
FROM panther_logs.public.kubernetes_control_plane
WHERE
    VERB IN ('create', 'update', 'patch')
    AND OBJECT_REF:resource = 'cronjobs'
    -- insert allow-list for expected cron jobs in a cluster, for example a sync service
```
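The sketch below applies the same filter in Python and pulls out the schedule and container images, which are usually the first things to check during triage. It assumes a parsed audit event and a hypothetical allow-list of "namespace/name" strings; for patch requests, ‘requestObject’ holds only the patch body, so the schedule or images may be missing. The helper names are illustrative.

```python
from typing import Any, Dict, Iterable, Optional

MODIFYING_VERBS = {"create", "update", "patch"}


def dig(obj: Any, *keys: str) -> Any:
    """Walk nested dicts, returning None as soon as a key is missing."""
    for key in keys:
        if not isinstance(obj, dict):
            return None
        obj = obj.get(key)
    return obj


def cronjob_change(event: Dict[str, Any], allowed: Iterable[str]) -> Optional[Dict[str, Any]]:
    """Summarize a CronJob create/update/patch that is not on the allow-list."""
    if event.get("verb") not in MODIFYING_VERBS or dig(event, "objectRef", "resource") != "cronjobs":
        return None
    key = f"{dig(event, 'objectRef', 'namespace')}/{dig(event, 'objectRef', 'name')}"
    if key in set(allowed):
        return None
    # A patch body may only contain the changed fields, so these lookups can return None
    containers = dig(event, "requestObject", "spec", "jobTemplate", "spec",
                     "template", "spec", "containers") or []
    return {
        "cronjob": key,
        "verb": event.get("verb"),
        "schedule": dig(event, "requestObject", "spec", "schedule"),
        "images": [c.get("image") for c in containers],
    }
```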

Threat: New DaemonSet Deployed to Kubernetes Cluster

Another technique for maintaining persistence, although not exclusive to this type of attack, is deploying a DaemonSet within a Kubernetes cluster. A DaemonSet is a workload that ensures a copy of a specific pod runs on every eligible node in the cluster. Whenever a new node joins, a pod is automatically created and scheduled onto it, which makes DaemonSets an ideal avenue for persistent access.

How to:

Much like other attacks that involve creating resources, detecting a new DaemonSet focuses primarily on ‘create’ requests against the target resource ‘daemonsets’.

Example Log:

```json
"objectRef": {
  "resource": "daemonsets",
  "name": "my-daemonset",
  "namespace": "default",
  "uid": "12345678-1234-5678-1234-567812345678"
}
```

Query Snippet:

```sql
SELECT *
FROM panther_logs.public.kubernetes_control_plane
WHERE
    VERB = 'create'
    AND OBJECT_REF:resource = 'daemonsets'
    -- insert allow-list for known DaemonSets running in your clusters, e.g. a security agent
```
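A similar Python sketch for DaemonSets returns the images a new DaemonSet would run on every node, skipping entries on a hypothetical "namespace/name" allow-list such as a known security agent. It assumes a parsed audit event; the function name is illustrative only.

```python
from typing import Any, Dict, Iterable, List, Optional


def new_daemonset_images(event: Dict[str, Any], allowed: Iterable[str]) -> Optional[List[str]]:
    """Images of a newly created DaemonSet that is not on the allow-list, else None."""
    ref = event.get("objectRef") or {}
    if event.get("verb") != "create" or ref.get("resource") != "daemonsets":
        return None
    if f"{ref.get('namespace')}/{ref.get('name')}" in set(allowed):
        return None
    spec = (event.get("requestObject") or {}).get("spec") or {}
    pod_spec = (spec.get("template") or {}).get("spec") or {}
    # Init containers also run on every node the DaemonSet lands on
    containers = (pod_spec.get("initContainers") or []) + (pod_spec.get("containers") or [])
    return [c.get("image") for c in containers]
```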

Privilege Escalation:

Threat: Pod Creation or Modification to a Node Host Path Volume Mount

Following code execution and/or a successful persistence implant, a malicious attacker will look to escalate privileges within the cluster. One of the more common attack paths is container breakout, in which an attacker gains unauthorized access to the underlying host system from within a containerized environment. Creating or modifying a pod to mount a volume from the underlying host can pose significant risk and potentially allow privilege escalation through underlying vulnerabilities. It can also open up possibilities for data exfiltration or unauthorized file access. In general, we only want to alert on host paths that can lead to privilege escalation, such as the container runtime sockets and the Kubernetes and kubelet directories listed in the query below.

How to:

For this detection, we focus on the ‘verb’ field to find any creation or modification of the target object, pods. When a pod is created or modified, we also inspect the ‘requestObject’ to identify whether a suspicious host path is mounted as a volume.

Example Log:

```json
"objectRef": {
  "apiVersion": "v1",
  "name": "kubelet-mount-pod",
  "namespace": "default",
  "resource": "pods"
},
"requestObject": {
  "apiVersion": "v1",
  "kind": "Pod",
  "metadata": {
    "name": "kubelet-mount-pod",
    "namespace": "default"
  },
  "spec": {
    "containers": [
      {
        "name": "kubelet-mount-container",
        "image": "nginx:latest"
      }
    ],
    "volumes": [
      {
        "name": "kubelet-data",
        "hostPath": {
          "path": "/var/lib/kubelet/pki",
          "type": "Directory"
        }
      }
    ]
  }
}
```

Query Snippet:

```sql
SELECT *
FROM panther_logs.public.kubernetes_control_plane,
    -- check every volume, not just the first one in the spec
    LATERAL FLATTEN(input => REQUEST_OBJECT:spec:volumes)
WHERE
    VERB IN ('create', 'update', 'patch')
    AND OBJECT_REF:resource = 'pods'
    -- ILIKE without wildcards matches these paths exactly; append '%' to a pattern to also catch subdirectories
    AND VALUE:hostPath:path::STRING ILIKE ANY ('/var/run/docker.sock', '/var/run/crio/crio.sock', '/var/lib/kubelet', '/var/lib/kubelet/pki', '/var/lib/docker/overlay2', '/etc/kubernetes', '/etc/kubernetes/manifests', '/etc/kubernetes/pki', '/home/admin')
    -- insert allow-list for expected workloads that require a sensitive mount
```
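Here is a Python sketch of the same check that inspects every volume and uses prefix matching instead of exact matches, so subdirectories such as /var/lib/kubelet/pki are covered by their parent entries. It normalizes each path with the standard-library `posixpath.normpath` first, so '..' segments or doubled slashes do not slip past the list. The function name is hypothetical; the path list is taken from the query above.

```python
import posixpath
from typing import Any, Dict, List

# Sensitive host paths from the query above; prefix matching covers their subdirectories.
SENSITIVE_HOST_PATHS = (
    "/var/run/docker.sock", "/var/run/crio/crio.sock", "/var/lib/kubelet",
    "/var/lib/docker/overlay2", "/etc/kubernetes", "/home/admin",
)


def sensitive_host_mounts(event: Dict[str, Any]) -> List[str]:
    """Return hostPath volumes in a pod spec that are, or sit under, a sensitive path."""
    ref = event.get("objectRef") or {}
    if event.get("verb") not in {"create", "update", "patch"} or ref.get("resource") != "pods":
        return []
    spec = (event.get("requestObject") or {}).get("spec") or {}
    hits = []
    for volume in spec.get("volumes") or []:
        raw = (volume.get("hostPath") or {}).get("path")
        if not raw:
            continue
        # Collapse "..", repeated and trailing slashes (normpath alone keeps a leading "//")
        path = posixpath.normpath("/" + raw.lstrip("/"))
        if any(path == p or path.startswith(p + "/") for p in SENSITIVE_HOST_PATHS):
            hits.append(raw)
    return hits
```

Returning the raw path, rather than the normalized one, keeps the evidence exactly as the attacker submitted it.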

Threat: Pod Created with Attachment to the Node Host Network

Similar to the above, attackers will look for other ways to escalate privileges. Another method is creating a pod attached to the node’s host network. Such a pod shares the node’s network namespace, which can let an attacker observe network traffic on that node and reach services that would otherwise be isolated from the pod network. Attackers could potentially use this to capture secrets or credentials passed over those connections and escalate their privileges; at a minimum, it removes the network isolation between the pod and the node.

How to:

As with many other pod creation detections, we focus on the ‘create’ verb for pod target objects. On creation, the boolean spec.hostNetwork in the ‘requestObject’ indicates whether the pod is attached to the host network.

Example Log:

```jsonc
"objectRef": {
  "resource": "pods",
  "namespace": "default",
  "name": "my-pod"
},
"responseStatus": {
  "code": 201
},
"requestObject": {
  "apiVersion": "v1",
  "kind": "Pod",
  "metadata": {
    "name": "my-pod",
    "namespace": "default"
  },
  "spec": {
    "hostNetwork": true
    // other pod specifications omitted
  }
}
```

Query Snippet:

```sql
SELECT *
FROM panther_logs.public.kubernetes_control_plane
WHERE
    VERB = 'create'
    AND OBJECT_REF:resource = 'pods'
    AND REQUEST_OBJECT:spec:hostNetwork = TRUE
    -- insert allow-list for expected pods that are attached to the node's network
```
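As a triage aid, the following Python sketch confirms the hostNetwork flag and lists the containerPort values the pod declares, which, with hostNetwork, are ports it expects to listen on in the node’s own network namespace. containerPort is informational, so a process can still bind other ports; the helper name is hypothetical and the event is assumed to be a parsed dict.

```python
from typing import Any, Dict, List, Optional


def host_network_ports(event: Dict[str, Any]) -> Optional[List[int]]:
    """For a pod created with hostNetwork, list the container ports it declares."""
    ref = event.get("objectRef") or {}
    spec = (event.get("requestObject") or {}).get("spec") or {}
    if event.get("verb") != "create" or ref.get("resource") != "pods":
        return None
    # Only an explicit boolean true attaches the pod to the node's network
    if spec.get("hostNetwork") is not True:
        return None
    return [
        port["containerPort"]
        for container in spec.get("containers") or []
        for port in container.get("ports") or []
        if "containerPort" in port
    ]
```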

Threat: Privileged Pod Created

Another common method of privilege escalation is deploying a privileged pod or running a pod as root. Privileged containers get access to the host’s devices and the full set of Linux capabilities, which gives them the ability to exploit the kernel and makes them a powerful launching point for further attacks. In the event of a successful container escape by a process running as root, the attacker retains root privileges on the node.

How to:

As with many other pod creation detections, we focus on the ‘create’ verb for the target object, pods. During this creation event, the ‘securityContext’ inside the ‘requestObject’ tells us, through boolean values, whether a container runs privileged (privileged: true) or is explicitly allowed to run as root (runAsNonRoot: false). Because securityContext can be set both on the pod and on each container, both levels are worth checking.

Example Log:

```json
"requestObject": {
  "apiVersion": "v1",
  "kind": "Pod",
  "metadata": {
    "name": "my-pod",
    "namespace": "default"
  },
  "spec": {
    "containers": [
      {
        "name": "my-container",
        "image": "nginx:latest",
        "securityContext": {
          "privileged": true,
          "runAsNonRoot": false
        }
      }
    ]
  }
}
```

Query Snippet:

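```sql
SELECT *
FROM panther_logs.public.kubernetes_control_plane,
    -- check every container, not just the first one in the spec
    LATERAL FLATTEN(input => REQUEST_OBJECT:spec:containers)
WHERE
    VERB = 'create'
    AND OBJECT_REF:resource = 'pods'
    AND (
        VALUE:securityContext:privileged::BOOLEAN = TRUE
        -- runAsNonRoot can be set on the container or on the pod
        OR VALUE:securityContext:runAsNonRoot::BOOLEAN = FALSE
        OR REQUEST_OBJECT:spec:securityContext:runAsNonRoot::BOOLEAN = FALSE
    )
    -- insert allow-list for pods that are expected to run as privileged workloads or as root
```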
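The Python sketch below mirrors the query over all containers and init containers, assuming a parsed audit event; the function name is hypothetical. One design choice differs slightly from the SQL: a container-level runAsNonRoot is treated as overriding the pod-level value, matching how Kubernetes resolves the two, so a container that sets it to true is not flagged just because the pod sets it to false.

```python
from typing import Any, Dict, List


def risky_containers(event: Dict[str, Any]) -> List[str]:
    """Names of containers that run privileged or explicitly allow running as root."""
    ref = event.get("objectRef") or {}
    if event.get("verb") != "create" or ref.get("resource") != "pods":
        return []
    spec = (event.get("requestObject") or {}).get("spec") or {}
    pod_ctx = spec.get("securityContext") or {}
    flagged = []
    for container in (spec.get("initContainers") or []) + (spec.get("containers") or []):
        ctx = container.get("securityContext") or {}
        # A container-level runAsNonRoot overrides the pod-level value
        run_as_non_root = ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot"))
        if ctx.get("privileged") is True or run_as_non_root is False:
            flagged.append(container.get("name"))
    return flagged
```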

Threat: Pod Created with Overly Permissive Linux Capabilities

Building on the above, excessive permissions can be a launch point for privilege escalation or container breakout. However, a pod does not need to be privileged or run as root to have excessive Linux capabilities. Attackers may create non-privileged pods but add specific Linux capabilities that emulate privileged access, such as BPF, NET_ADMIN and SYS_ADMIN, the capabilities monitored in the query below.

How to:

As with many other pod creation detections, we focus on the ‘create’ verb for pod target objects. On creation, each container’s securityContext in the ‘requestObject’ can include a capabilities.add array listing the Linux capabilities to add. We alert when this array overlaps with the list of capabilities we want to monitor.

Example Log:

```json
"requestObject": {
  "kind": "Pod",
  "apiVersion": "v1",
  "metadata": {
    "name": "my-pod",
    "namespace": "default"
  },
  "spec": {
    "containers": [
      {
        "name": "container-1",
        "securityContext": {
          "capabilities": {
            "add": ["BPF"]
          }
        }
      }
    ]
  }
}
```

Query Snippet:

```sql
SELECT *
FROM panther_logs.public.kubernetes_control_plane,
    -- check every container, not just the first one in the spec
    LATERAL FLATTEN(input => REQUEST_OBJECT:spec:containers)
WHERE
    VERB = 'create'
    AND OBJECT_REF:resource = 'pods'
    AND VALUE:securityContext IS NOT NULL
    -- Linux capabilities overlap with the watch list
    AND ARRAYS_OVERLAP(VALUE:securityContext:capabilities:add::ARRAY, ARRAY_CONSTRUCT('BPF', 'NET_ADMIN', 'SYS_ADMIN'))
    -- insert allow-list for pods that are expected to have privileged Linux capabilities, for example an observability agent
```
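Here is the same overlap check as a Python sketch across all containers and init containers, assuming a parsed audit event. Two additions are our own assumptions rather than part of the query: capability names are normalized defensively (upper-cased, optional CAP_ prefix removed), and ALL, which grants every capability, is included in the watch list. The names are hypothetical.

```python
from typing import Any, Dict, Set

# Watch list from the query above, plus ALL (our addition).
WATCHED_CAPABILITIES = {"BPF", "NET_ADMIN", "SYS_ADMIN", "ALL"}


def normalize_cap(cap: str) -> str:
    """Upper-case a capability name and drop an optional CAP_ prefix."""
    cap = cap.strip().upper()
    return cap[4:] if cap.startswith("CAP_") else cap


def watched_capabilities_added(event: Dict[str, Any]) -> Dict[str, Set[str]]:
    """Map container name to the watched capabilities it adds (non-empty entries only)."""
    ref = event.get("objectRef") or {}
    if event.get("verb") != "create" or ref.get("resource") != "pods":
        return {}
    spec = (event.get("requestObject") or {}).get("spec") or {}
    found = {}
    for container in (spec.get("initContainers") or []) + (spec.get("containers") or []):
        added = ((container.get("securityContext") or {}).get("capabilities") or {}).get("add") or []
        overlap = {normalize_cap(c) for c in added} & WATCHED_CAPABILITIES
        if overlap:
            found[container.get("name")] = overlap
    return found
```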

Threat: Pod Created in the Host IPC or Host PID Namespace

Another common technique to escalate privileges and break out of isolation is deploying pods in the host PID namespace or the host IPC namespace. Deploying a pod in the host IPC namespace breaks isolation between the pod and the underlying host, giving the pod direct access to the same IPC objects and communication channels as the host system. Similarly, the host PID namespace gives a pod and its containers direct access to, and the same view of, the host’s processes. Both can offer a powerful escape hatch to the underlying host.

How to:

Much like many other pod creation detections, our primary focus is the ‘create’ verb applied to pod target objects. During pod creation, we also check two fields in the ‘requestObject’ spec: ‘hostPID’ and ‘hostIPC’.

Example Log:

```jsonc
"requestObject": {
  "kind": "Pod",
  "apiVersion": "v1",
  "metadata": {
    "name": "my-pod",
    "namespace": "default"
  },
  "spec": {
    // ...other pod specifications omitted
    "enableServiceLinks": true,
    "hostIPC": true,
    "hostNetwork": true,
    "hostPID": true
  }
}
```

Query Snippet:

```sql
SELECT *
FROM panther_logs.public.kubernetes_control_plane
WHERE
    VERB = 'create'
    AND OBJECT_REF:resource = 'pods'
    AND (REQUEST_OBJECT:spec:hostPID = TRUE OR REQUEST_OBJECT:spec:hostIPC = TRUE)
    -- insert allow-list for pods that are expected to be deployed in the host PID or IPC namespace
```
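As a small Python sketch, assuming a parsed audit event (the helper name is hypothetical), reporting which host namespaces a pod shares makes the alert easier to triage than a single boolean:

```python
from typing import Any, Dict, List

HOST_NAMESPACE_FIELDS = ("hostPID", "hostIPC")


def shared_host_namespaces(event: Dict[str, Any]) -> List[str]:
    """Return which host namespaces a newly created pod shares with its node."""
    ref = event.get("objectRef") or {}
    if event.get("verb") != "create" or ref.get("resource") != "pods":
        return []
    spec = (event.get("requestObject") or {}).get("spec") or {}
    # Only an explicit boolean true shares the namespace; absent means false.
    return [field for field in HOST_NAMESPACE_FIELDS if spec.get(field) is True]
```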

Defense Evasion:

Threat: Pod Created in Pre-Configured or Default Namespace

Following a cluster breach and successful code execution, attackers will often try to hide their presence or evade defenses. One way to do this, although not very sneaky, is to create a pod in a pre-configured namespace such as default, kube-system or kube-public. In general, only administrators should be creating pods in the kube-system namespace, and it is not best practice to run anything other than cluster-critical infrastructure there. The kube-public namespace is intended to be readable by all clients, including unauthenticated users, and the default namespace ships with the cluster and should not be used in production. Although these namespaces do not explicitly evade defenses, they offer an opportunity to hide in plain sight, and new activity there should be anomalous.

How to:

For this detection, our primary focus is once again the ‘verb’ field, specifically ‘create’ requests. We also check the ‘objectRef’ field to confirm that the target object is a pod being created in one of the pre-configured namespaces mentioned above.

Example Log:

```json
"objectRef": {
  "apiVersion": "v1",
  "name": "my-pod",
  "namespace": "kube-system",
  "resource": "pods"
},
"requestObject": {
  "apiVersion": "v1",
  "kind": "Pod",
  "metadata": {
    "name": "my-pod",
    "namespace": "kube-system"
  },
  "spec": {
    "containers": [
      {
        "name": "my-container",
        "image": "nginx:latest"
      }
    ]
  }
}
```

Query Snippet:

```sql
SELECT *
FROM panther_logs.public.kubernetes_control_plane
WHERE
    VERB = 'create'
    AND OBJECT_REF:resource = 'pods'
    AND REQUEST_OBJECT:kind = 'Pod' -- kind values are case-sensitive
    AND OBJECT_REF:namespace IN ('kube-system', 'kube-public', 'default')
    -- insert allow-list for known workloads that are not sensitive or need to run in these namespaces
```
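In Python, the same check with a hypothetical allow-list keyed on the requesting username might look like the sketch below, assuming a parsed audit event. Keying on who made the request, rather than on pod names, is an illustrative choice: controller-created pods often have generated names, while the creating identity is stable.

```python
from typing import Any, Dict, Iterable

PRECONFIGURED_NAMESPACES = {"kube-system", "kube-public", "default"}


def is_unexpected_preconfigured_pod(event: Dict[str, Any], allowed_users: Iterable[str]) -> bool:
    """Flag pod creations in pre-configured namespaces by users not on the allow-list."""
    ref = event.get("objectRef") or {}
    if event.get("verb") != "create" or ref.get("resource") != "pods":
        return False
    if ref.get("namespace") not in PRECONFIGURED_NAMESPACES:
        return False
    # Key the allow-list on the authenticated requester rather than the pod name
    username = (event.get("user") or {}).get("username")
    return username not in set(allowed_users)
```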

Discovery:

Threat: New Admission Controller Created

Once attackers have gained a foothold in a cluster and potentially figured out how to escalate privileges, they may try to discover resources and understand how your cluster operates. While not strictly a discovery technique, deploying a new admission controller, in the form of a mutating or validating admission webhook, lets an attacker receive every API request that matches the webhook’s rules (create, update, delete and connect operations), allowing enumeration of resources and common actions within the cluster. This can be a very powerful tool for deciding where to pivot next.

How to:

This detection centers on two elements: first, the ‘verb’ field, specifically all ‘create’ events; and second, confirming that the object being created is an admission webhook configuration.

Example Log:

```json
"objectRef": {
  "resource": "mutatingwebhookconfigurations",
  "name": "my-mutating-webhook-config",
  "apiGroup": "admissionregistration.k8s.io",
  "apiVersion": "v1",
  "uid": "12345678-1234-5678-1234-567812345678"
}
```

Query Snippet:

```sql
SELECT *
FROM panther_logs.public.kubernetes_control_plane
WHERE
    VERB = 'create'
    AND OBJECT_REF:resource IN ('mutatingwebhookconfigurations', 'validatingwebhookconfigurations')
    -- insert allow-list for known admission controllers such as Gatekeeper/OPA
```
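For triage, the most telling details of a new webhook configuration are where it sends requests and which operations it intercepts. This Python sketch, assuming a parsed audit event and using hypothetical names, lists each webhook’s destination (an external URL or an in-cluster service, per its clientConfig) and the operations from its rules; an external URL deserves extra scrutiny.

```python
from typing import Any, Dict, List

WEBHOOK_RESOURCES = {"mutatingwebhookconfigurations", "validatingwebhookconfigurations"}


def new_webhook_targets(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Describe where each webhook in a newly created configuration sends requests."""
    ref = event.get("objectRef") or {}
    if event.get("verb") != "create" or ref.get("resource") not in WEBHOOK_RESOURCES:
        return []
    targets = []
    for hook in (event.get("requestObject") or {}).get("webhooks") or []:
        client = hook.get("clientConfig") or {}
        service = client.get("service") or {}
        # A webhook calls either an external URL or an in-cluster service
        destination = client.get("url") or f"service {service.get('namespace')}/{service.get('name')}"
        operations = sorted({op for rule in hook.get("rules") or [] for op in rule.get("operations") or []})
        targets.append({"webhook": hook.get("name"), "destination": destination, "operations": operations})
    return targets
```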

Threat: Secret Enumeration by a User

Traditional discovery tactics also apply to Kubernetes clusters, and one significant tactic is secret enumeration. Attackers frequently attempt to identify and list all secrets stored within a cluster. These secrets can include a wide range of sensitive information, such as API tokens, passwords and other confidential data, and access to them can enable lateral or vertical movement and unauthorized access to critical resources. The same approach extends to other kinds of enumeration, including user, resource and policy enumeration.

How to:

This detection focuses on a particular user trying to list, get or watch a large number of secrets in a short period of time. These secret references are typically located within the object reference field.

Example Log:

```json
"objectRef": {
  "resource": "secrets",
  "name": "my-secret",
  "namespace": "my-namespace",
  "apiVersion": "v1",
  "uid": "12345678-1234-5678-1234-567812345678"
}
```

Query Snippet:

```sql
SELECT
    USER_AGENT,
    USER:username AS username, -- variant path keys are case-sensitive
    ARRAY_UNIQUE_AGG(OBJECT_REF:name) AS secrets_enumerated,
    ARRAY_SIZE(ARRAY_UNIQUE_AGG(OBJECT_REF:name)) AS total_secrets,
    ARRAY_UNIQUE_AGG(RESPONSE_STATUS:code) AS status,
    COUNT(*) AS total_request
FROM panther_logs.public.kubernetes_control_plane
WHERE
    VERB IN ('list', 'get', 'watch')
    AND OBJECT_REF:resource = 'secrets'
    -- insert allow-list here
    AND EVENT_TIME >= DATEADD(minute, -15, CURRENT_TIMESTAMP())
GROUP BY
    USER_AGENT,
    USER:username
HAVING
    total_secrets >= 15 -- this threshold is environment-specific and should be tuned to your deployment
LIMIT 10
```
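Here is the enumeration query expressed as a Python sketch over a batch of parsed audit events. It mirrors the SQL: read verbs on secrets in the last 15 minutes, grouped by username and user agent, counting distinct secret names against the same tunable threshold of 15. The helper names are hypothetical, and `now` must be timezone-aware because the parsed timestamps are.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Any, Dict, Iterable, List, Set, Tuple

READ_VERBS = {"list", "get", "watch"}


def parse_ts(value: str) -> datetime:
    """Parse RFC 3339 timestamps such as 2023-11-22T10:30:00Z (works before Python 3.11)."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def secret_enumerators(events: Iterable[Dict[str, Any]], now: datetime,
                       window_minutes: int = 15, threshold: int = 15) -> List[Dict[str, Any]]:
    """Users who touched at least `threshold` distinct secrets within the window."""
    start = now - timedelta(minutes=window_minutes)
    secrets: Dict[Tuple[Any, Any], Set[str]] = defaultdict(set)
    for event in events:
        ref = event.get("objectRef") or {}
        if event.get("verb") not in READ_VERBS or ref.get("resource") != "secrets":
            continue
        if parse_ts(event["requestReceivedTimestamp"]) < start:
            continue
        key = ((event.get("user") or {}).get("username"), event.get("userAgent"))
        if ref.get("name"):  # list/watch requests may not name a single secret
            secrets[key].add(ref["name"])
    return [
        {"username": user, "user_agent": agent, "total_secrets": len(names)}
        for (user, agent), names in secrets.items()
        if len(names) >= threshold
    ]
```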

Although the primary purpose of this blog is to present actionable detections for Kubernetes, what good is a detection if you can’t effectively respond to it? Detection triage and response is not cookie-cutter; however, here is a general response outline you can use as a starting point and adapt as you work through your Kubernetes detection triage.

Scoping:

Analysis:

Containment and Remediation:

Recovery:

Lessons Learned:

Although this is not a comprehensive list of everything that could happen in the Kubernetes platform, it should give you a running start in securing your Kubernetes deployments. Lastly, I want to express my gratitude to our partners at Panther Labs once again for making all of this possible and for publishing our detections to their platform here.