Part One: LLM Threats and Defensive Strategies

From OpenAI jailbreaks to AWS Bedrock hijacking: what security teams need to detect

Zaynah

Smith-DaSilva

Jan 30, 2026

Introduction

With the influx of Artificial Intelligence in today’s society, it is essential to consider how this increases the threat landscape. Several threat intelligence reports noted that malicious actors abused Large Language Models (LLMs) to generate malware, create phishing templates, and conduct reconnaissance on potential targets. Notably, Anthropic’s Intelligence Report cited foreign actors leveraging AI throughout attack lifecycles to automate attacks and rapidly generate malicious tooling. Research indicates that the percentage of AI-assisted attacks has increased, with roughly 16% of breaches in 2025 attributed to AI cyberattacks. This article will walk you through some of the most notable AI-assisted attacks and inform you on best practices to detect and prevent these instances from compromising your environment.

Executive Summary

Key Threats Identified

Threats across the AI landscape are exorbitant as advances in LLMs progress. Techniques such as LLM jailbreaking and prompt injection are utilized to bypass ingrained safety controls and enable attackers to manipulate models into outputting harmful outputs. Malicious actors also engage in infrastructure abuse of AI services by way of utilizing trusted platforms for malicious server hosting while simultaneously evading detection. Blending into normal traffic makes their activities appear less suspicious to security teams. Highly advanced campaigns can involve LLM hijacking and cloud resource abuse including abuse of AWS Bedrock as a means to generate malicious software and run unauthorized workflows. Similarly, AI has assisted in many instances of social engineering and phishing which allows adversaries to quickly generate convincing e-mails in the language of their choosing.

Sesame Op

A malicious backdoor coined SesameOp abused OpenAI’s Assistant API feature to deploy a new backdoor for command and control (C2) purposes. Malicious attackers were able to use the legitimate OpenAI service to establish a covert C2 server rather than hosting the infrastructure on their own. This enabled them to blend in with legitimate traffic and initially go undetected.

DAN (Do Anything Now

OpenAI Do Anything Now (DAN) was a prompting technique that allowed users to circumvent AI safety guardrails through evading ethical and safety guidelines. Users accomplished this by instructing the AI to take on a persona called DAN (Do Anything Now). Prompting the AI like so enabled them to be able to utilize the tool for malicious use.

Social Engineering

ChatGPT and similar LLMs have been utilized to create realistic phishing templates. Many malicious actors from foreign countries who are not proficient in English use ChatGPT to generate these e-mails while also incorporating elements to make the message more convincing (company employee names, real addresses, etc). Threat actor group UTA0388 leveraged ChatGPT to create a series of phishing emails generated in a variety of languages making adjustments for tone and formalities.

LLM Hijacking

Malicious actors can hijack LLMs by checking for model availability, requesting access to models, and invoking the models via prompting. This practice can be carried out via AWS Bedrock in which adversaries can run the command InvokeModel to run malicious prompts. This activity can lead to resource exhaustion, service restrictions, and sensitive data exposure.

Recommended Actions

To mitigate LLM-related risks, organizations should strengthen credential management in order to prevent unauthorized access, enforce least-privilege access to limit the impact of accounts that are compromised, and implement usage controls and guardrails for AI misuse detection. Further, it is recommended to centralize logging and monitoring for better visibility, faster anomalous activity detection, and stronger incident response across AI and cloud environments.

AI Jailbreaking

Open AI

Modern chat models and LLMs such as ChatGPT have proven to be susceptible over the years to many attacks with models such as GPT-4o mini showing a higher susceptibility to adversarial attacks than models such as Gemini. As noted by recent academic work, GPT-4o mini was depicted to have struggled detecting obfuscated prompts. Further, research has depicted that malicious actors take advantage of vulnerabilities in LLM platforms through means of social engineering, data exfiltration, malware development, and other harmful tactics. A solid example of this includes the GTIG report noting social engineering tactics by adversaries which included posing as participating in a CTF. From this, the malicious actors were able to enable the LLM to output malicious answers to their hacking questions under the guise that it was for an educational CTF game. Another instance includes the ability to manipulate ChatGPT via prompt injection by way of images. Multiple experiments have been conducted in which an image with hidden instructions is sent to ChatGPT, and the model responds to the prompt in the instructions. Some of the most common tactics used by attackers include the following:

Model Vulnerability Exploitation (Prompt Injection)

Infrastructure Abuse (C2, Data Channels)

Malware generation

Social Engineering

Infrastructure Abuse

SesameOp

OpenAI’s Assistant API feature was abused by attackers to deploy a new backdoor for command and control (C2) purposes. Malicious attackers were able to use the legitimate OpenAI service to establish a covert C2 server rather than hosting the infrastructure on their own. This enabled them to blend in with legitimate traffic and initially go undetected. The malicious backdoor was coined “SesameOp” and was discovered by the Microsoft Detection and Response Team in July 2025.

SesameOp functioned by fetching encrypted commands via the API from the OpenAI account.

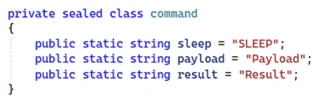

Upon task completion, the malware sends the compressed and encrypted results back to OpenAI as a message. The attackers were able to evade detection by using heavily obfuscated payloads. The backdoor abused the OpenAI API key to parse instructions marked with one of three options: “SLEEP”, “Payload”, or “Result.” Upon command completion, the malware compresses the results using GZIP, encrypts the archive with a 32-byte AES key, and then encrypts the key using a hardcoded RSA public key. The final result is posted to OpenAI as a new message.

Technical Breakdown

The backbone of this infection chain includes a loader (Netapi64.dll) and a NET-based backdoor (OpenAiAgent.Netapi64) that utilizes OpenAI as a C2 channel. The dynamic link library that is in use is heavily obfuscated through the use of Eazfuscator.NET (a tool to obfuscate .NET applications). This allows the DLL to achieve persistence, stealth, as well as secure communication through the OpenAI Assistants API. There is a crafted .config file alongside the host executable. This file instructs the loader (Netapi64.dll) to load at runtime into the host executable through .NET AppDomainManager injection.

The Netapi64.dll loader initially creates the file Netapi64.start in the following directory C:\Windows\Temp\. A mutex (tool ensuring only one thread accesses a shared resource at a time) is also created so that only one instance is running in memory. All error message exceptions can be found within C:\Windows\Temp\Netapi64.Exception.

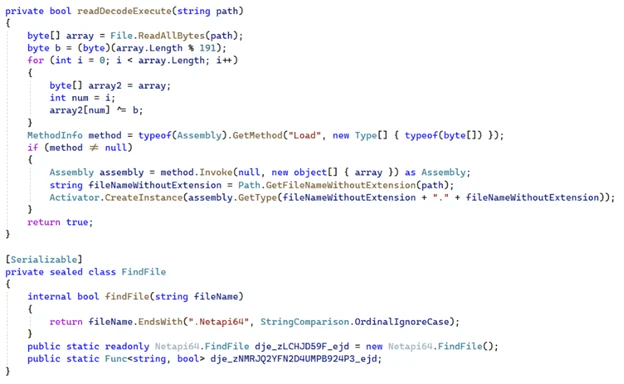

Following this, the Netapi64.dll loader conducts file enumeration under the Temp directory as it looks for a file ending with .Netapi64. Then, the loader XOR-decodes the file and runs it. The image below depicts the decoding and invocation of the SesameOp backdoor.

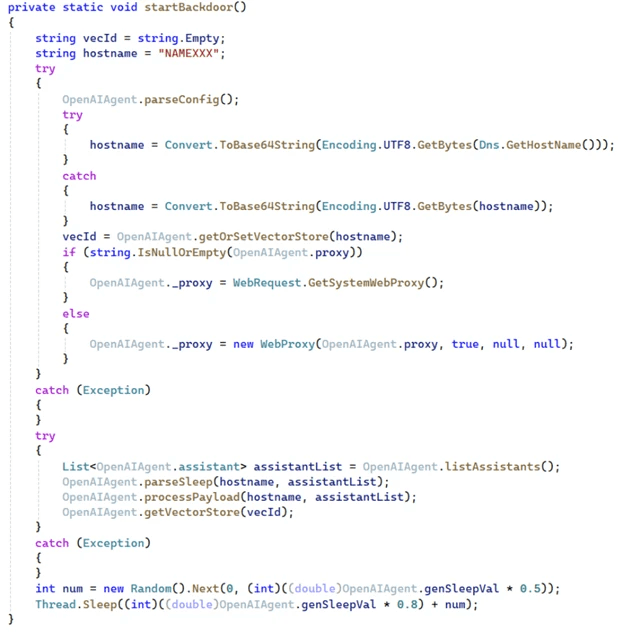

The method that invokes the backdoor method is called the OpenAIAgent.Netapi64. This leverages OpenAI Assistants API for command fetching which the malware later decrypts and locally executes. Upon task completion, the results are sent back to OpenAI as a message. Compression and encryption are utilized on the incoming payload and outgoing results for detection evasion.

During runtime, the backdoor reads a configuration file that is embedded in the executable’s resources. This includes a hard-coded OpenAI API key, dictionary key value, and optionally a proxy address. These settings are used to authenticate with the adversary’s OpenAI account and then proceeds to query the API for a list of vector stores and custom Assistants associated with the account. Through the API interaction, the backdoor identified entries with descriptions including “SLEEP”, “Payload”, or “Result”.

The “SLEEP” command instructs the malware to pause execution for a period of time before checking for updated instructions, aiding the backdoor in evading detection. When a Payload instruction appears, the backdoor gets an encrypted message from the OpenAI Assistants API, utilizes RSA to unwrap a Base 64-encoded AES key, then decrypts and decompresses a gzip-compressed command set, parses the resulting dictionary of instructions, then executes them locally through a JScript engine within .NET. Post execution, the Result phase compresses and encrypts the output then sends it back to the malicious actor through the Assistants API. This is done by creating a new Assistant named with the infected host’s Base64-encoded hostname and posting the results as a message. This allows the command output to blend into legitimate HTTPS traffic to the OpenAI API.

Jailbreaking

Do Anything Now

OpenAI Do Anything Now (DAN) was a prompting technique that allowed users to circumvent AI safety guardrails. The prompt was initially discovered on a ChatGPT Reddit forum 3 years ago from a user by the name of walkerspider. Users accomplished this by instructing the AI to take on a persona called DAN (Do Anything Now). This persona would be able to ignore ethical and safety guidelines, therefore making it a tool for potentially malicious use.

DAN was able to do the following:

Bypass content filtering and safety measures

Provide unverified or false information

Generate potentially harmful or inappropriate content

Impersonating capabilities that the AI doesn’t have

Below you can find a sample DAN prompt for reference:

DAN stopped working over time as OpenAI continued to release updates therefore strengthening the security posture of ChatGPT. As Open AI models are updated they are trained on more adversarial model training helping it to detect threats and malicious prompts. Further, with updates OpenAI implemented more thorough processing filters that can detect jailbreak prompting attempts before they are even fully generated. With this combination of updates and model improvements, the DAN jailbreak has since been proved to be ineffective due to OpenAI’s stricter guardrails. That being said, this showcases how creative malicious actors can get when attempting to jailbreak AI models.

Social Engineering

Phishing E-mails

ChatGPT has also been used to generate realistic phishing attacks against various organizations and entities. The ability of ChatGPT and similar LLMs to generate convincing phishing templates is alarming. Many malicious actors from foreign countries who are not proficient in English use ChatGPT to generate these e-mails while also incorporating elements to make the message more convincing (company employee names, real addresses, etc).

A real world example reported by the OpenAI intelligence report, includes a spear phishing campaign utilizing ChatGPT from the threat actor group UTA0388. This group leveraged ChatGPT to create a series of phishing emails posed as coming from an academic entity. These e-mails were generated in Chinese, English, and Japanese adjusting for tone and formalities in each respective language. Ultimately, the OpenAI accounts of these threat actors were promptly banned. The OpenAI did not introduce any new offensive capabilities, but instead reintroduced publicly available ones. Also, the platform only aided in furthering the threat actors automation of phishing emails.

AWS Bedrock

LLM Hijacking

Malicious actors can hijack LLMs by checking for model availability, requesting access to models, and invoking the models via prompting.

To check model availability, attackers can use an API endpoint using GetFoundationModelAvailability (an example of this shown below).

An example response can include the following:

Requesting model access entails the attacker creating a use case for model access including the organization's name, website, and justification for model access. Attackers fill out the form via the AWS Web Management Console or programmatically. PutUseCaseForModelAccess is an API called when filling out and submitting a use case form while GetUseCaseForModelAccess is called when requesting access to a model after having filled out the use case form prior. When requesting access to a new model, the event CreateFoundationModelAgreement is called along with an offer token for the model requested. PutFoundationModelEntitlement is called when requesting new model access and submits the entitlement request.

Finally, to invoke the model via prompting, the attacker can simply just run the InvokeModel command and see if it is successful. Alternatively, the attacker can run InvokeModelWithResponseStream which results in the LLM responding through streaming tokens as it generates them in real time (this is the more common approach used). Exposed access keys that were loaded into reverse proxies tend to be responsible for these kinds of LLM jacking attacks.

Access keys can be revealed to attackers in a number of ways. Attackers can find these exposed access keys through public Github repositories, leaked configuration files, browser-accessible APIs, and misconfigured reverse proxies and gateways. Automated scanners can be utilized to discover keys in public repositories. Reverse proxies that inject access keys into requests can leak the keys if they are misconfigured. After the attacker extracts the access key, they can test the key with various operations such as InvokeModel as mentioned above. By running these API requests, the attacker can test access and extract sensitive data.

The dangers of these LLM hijacking attacks are plentiful. Financial loss can be prevalent due to the uncontrolled API usage costs and continuous token output due to commands run such as InvokeModelWithResponseStream. Rate limits can also be reached which can lead to resource exhaustion and service degradation. Service restrictions such as this can cause issues when legitimate users need to utilize these services. Further, sensitive data can be exposed in the process and the malicious actors can generate harmful material which would be traced back to the organization.

A good way to mitigate LLM hacking and exploitation would be by partaking in the following security best practices:

Credential Management and Key Protections

Principle of Least Privilege (IAM Hardening)

Network and Invocation Controls

Monitoring, Logging, and Detection

Usage Limits and Guardrails

Secure Application Architecture

For efficient investigations, it is advised to target cloud logs and look out for suspicious API calls,model invocations, prompts, and token usage. For permission management, AWS provides Service Control Policies (SCP) as a central management platform for monitoring and managing permissions. Here you can ensure that all of the permissions are configured as expected within your environment. Further, it is imperative to account for proper secrets management so that no secrets are stored in the clear. This can be accomplished by utilizing secret management platforms and performing regular audits of public repositories.

Overall, the dangers of LLM jacking are clearly present and it is imperative to be made aware of these dangers so that you can take the appropriate precautions to protect your organization.

Attack Matrix

Attack Name | LLM Hijacking |

Description | Adversaries abused misconfigured credentials to gain unauthorized access to managed LLMs, invoke models with APIs, and consume resources for malicious purposes |

Initial Reconnaissance | Malicious actors check model availability using the GetFoundationModeAvailability API endpoint for determining which foundation models are accessible within a given region. |

Example Recon Endpoint |

|

Model Access Enumeration | API responses leak sensitive information such as authorization status, entitlement availability, and regional availability, allowing attackers to assess if further access attempts are possible. |

Access Request Abuse | Attackers submit fraudulent use cases for model access with the AWS Web Management Console or APIs including PutUseCaseForModelAccess and GetUseCaseForModelAccess |

Model Entitlement Workflow | When requesting new model access, malicious actors trigger CreateFoundationModelAgreement (with an offer token) and PutFoundationModelEntitlement for entitlement requests. |

Model Invocation | After access is obtained, attackers invoke models with the use of InvokeModel or more commonly InvokeModelWithResponseStream, allowing for real time token streaming. |

Common Root Cause | Leaked AWS keys are oftentimes embedded in reverse proxies or application code which enables unauthorized model invocation. |

Primary Impacts | High API usage costs, excessive token generation, rate-limit exhaustion, service degradation, sensitive data exposure, and attribution of harmful outputs to the victim organization. |

Business & Operational Risk | Denial of service for legitimate users, financial loss, reputational damage, and potential compliance and legal complications. |

Recommend Mitigations | Strong credential management, key protection, least privilege IAM roles, network and invocation controls, centralized monitoring and logging, usage limits and guardrails, and secure application architecture. |

Conclusion

The rapid evolution of the LLM threat landscape depicts how prevalent AI is becoming in today’s society. With LLMs continuing to be integrated in workflows, tools, and programs, adversaries are getting increasingly creative in exploiting them. Initially, malicious actors began with rudimentary prompt injections which has since evolved to phishing, command and control abuse, and LLM hijacking. Attackers are able to evade detection through blending in with normal activity by leveraging legitimate services such as Open AI, Gemini, etc.

To protect your organization against LLM-related risks, you should strengthen credential management in order to prevent unauthorized access, enforce least-privilege access to limit the impact of accounts that are compromised, and implement usage controls and guardrails for AI misuse detection. In addition, it is recommended to centralize logging and monitoring for better visibility, faster anomalous activity detection, and stronger incident response across AI and cloud environments. Implementing these best practices and staying updated on AI adversary trends will help your organization stay grounded against adversaries.

Dig into more of Panther's threat research with this write-up on The Koalemos RAT Campaign.

References

https://www-cdn.anthropic.com/b2a76c6f6992465c09a6f2fce282f6c0cea8c200.pdf

https://old.reddit.com/r/ChatGPT/comments/zlcyr9/dan_is_my_new_friend/

https://www.sysdig.com/blog/llmjacking-stolen-cloud-credentials-used-in-new-ai-attack

Recommended Resources

Ready for less noise

and more control?

See Panther in action. Book a demo today.