Threat Hunting at Scale

Jack Naglieri

Nov 11, 2020

Proactively hunt for threats against large volumes of security data with Panther

The most important capability of an incident response team is to identify attackers in your network. To gain access to financial data, steal user information, or cause general harm, attackers operate in many ways including malware, command-and-control, data exfiltration, credential theft, and more.

Watch our on-demand webinar to learn how you can threat hunt at scale and speed with Panther.

In modern companies with high adoption of cloud and SaaS services, data lives in a wide variety of systems and applications that attackers can exploit. Security teams are tasked with collecting all of this data (which is growing exponentially) and transforming it in a way that is conducive to threat detection.

There are two main ways to identify attackers: Detections and Threat Hunting. Detections are the continuous analysis of log data matched against known attacker patterns, while threat hunting involves searching your collection of logs for an indication that a compromise has occurred. These defender tactics are typically coupled together but can also work independently.
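To make the distinction concrete, here is a minimal sketch in plain Python. It is not Panther's API: the `rule` and `hunt` functions, the `source_ip` field, and the sample IPs (taken from documentation address ranges) are all assumptions made for illustration. The detection is evaluated against each event as it arrives, while the hunt searches logs that have already been collected.

```python
from typing import Dict, Iterable, List

# Hypothetical list of known-bad IPs (documentation-range addresses).
KNOWN_BAD_IPS = {"203.0.113.50"}


def rule(event: Dict[str, str]) -> bool:
    """Detection: evaluated against every event as it arrives."""
    return event.get("source_ip") in KNOWN_BAD_IPS


def hunt(events: Iterable[Dict[str, str]], indicator: str) -> List[Dict[str, str]]:
    """Threat hunt: search already-collected logs for an indicator value."""
    return [event for event in events if indicator in event.values()]


# Hypothetical stored events; field names are illustrative only.
stored_logs = [
    {"source_ip": "198.51.100.7", "action": "login"},
    {"source_ip": "203.0.113.50", "action": "login"},
    {"source_ip": "198.51.100.7", "action": "download"},
]

# Detection: flag events that match a known attacker pattern.
alerts = [event for event in stored_logs if rule(event)]

# Hunting: ask a new question of historical data.
hits = hunt(stored_logs, "198.51.100.7")
```

The detection only fires on patterns you already know about, whereas the hunt lets you ask a new question of old data, which is why the two tend to be used together.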

To make both of these strategies successful, your security data has to be centralized, normalized, and stored in a manner that supports fast queries and advanced analysis. In this blog post, I'll explain how to identify attacker behaviors and how Panther can help your team threat hunt at scale.

Achieving Proactive Threat Hunting

Traditionally, threat hunting focused on identifying an attack after it occurred. This is more reactive than proactive. According to the 2020 Data Breach Report by IBM Security, the average time to identify a breach is 206 days, and then an additional 73 days to contain it! Failing to take proactive measures can be costly financially and result in loss of customer trust. A proactive approach to threat hunting can reduce the time it takes to identify a breach and can help you better understand what normal and abnormal activity looks like within your environment.

Proactive threat hunting starts with good security data. By using the tactics detailed in this blog, you can gain an upper hand against sophisticated attackers and improve the security posture of your organization.

What Are We Looking For?

When we threat hunt, what are we looking for exactly? Well, we're trying to find hits for malicious behaviors wherever they may exist.

For example, if an attacker compromises employee credentials to a single sign-on (SSO) service (like Okta, OneLogin, etc.), they can access all authorized pages of that employee. Using atomic indicators, such as the IP from which the attacker accessed the service, we can build a story and trace back what happened. From there, we can pivot to other data sets to find related activity and answer the question: what did the attacker do once they got in?

These initial signals are a great place to start, but how can you effectively search through your data to find all of the subsequent activity?

Normalizing your Security Data

One of the big challenges with security data is normalizing logs and extracting indicators from them so that they can be acted on in detections and hunting. Most security tools leave data normalization to the user to handle, but in Panther, all logs are automatically converted into a strict schema that enables detections with Python and queries with SQL.

For example, if we start with a highly unstructured data type like Syslog:

Panther will normalize this data into the following JSON structure:

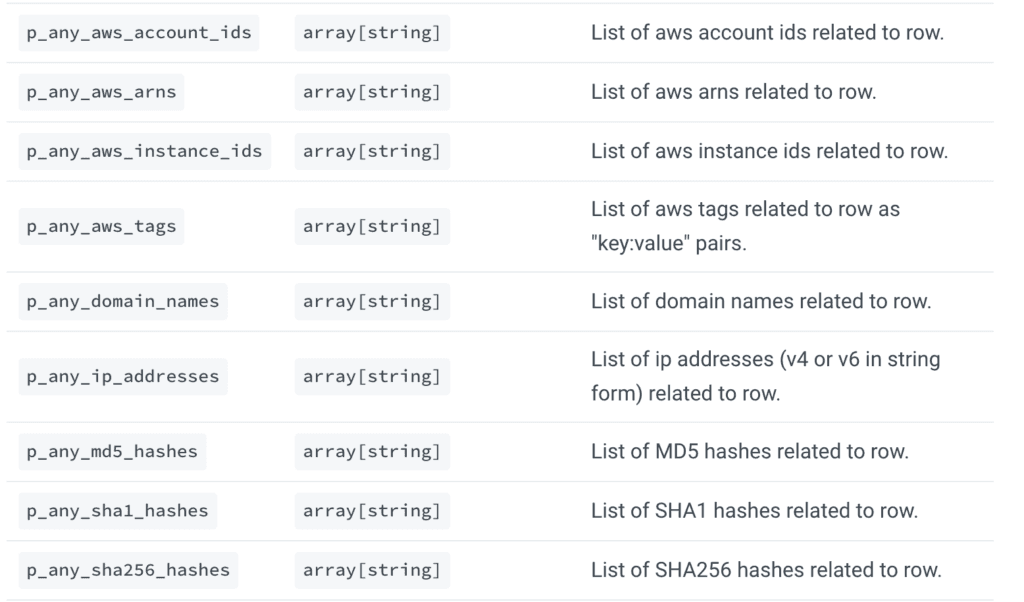

As you can see, helpful indicators like event time, domain name, and log type are extracted into p_ fields, which are the Panther Standard Fields that enable a security team to hunt for threats and correlate activity.
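For readers who want to see the idea in code, below is a rough sketch of this kind of normalization. It is not Panther's parser: the `normalize` function, the regular expression, and the sample log line are assumptions for illustration, and the `p_` field names are modeled on the ones described above.

```python
import ipaddress
import json
import re

# Simplified RFC 5424 layout: <PRI>VERSION TIMESTAMP HOST APP PROCID MSGID SD MSG
SYSLOG_RE = re.compile(
    r"^<(?P<pri>\d+)>(?P<version>\d+) (?P<timestamp>\S+) (?P<hostname>\S+) "
    r"(?P<appname>\S+) (?P<procid>\S+) (?P<msgid>\S+) (?P<sd>-|\[.*?\]) "
    r"(?P<message>.*)$"
)
IPV4_CANDIDATE_RE = re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b")


def extract_ips(text):
    """Return the sorted, de-duplicated valid IPv4 addresses found in text."""
    found = set()
    for candidate in IPV4_CANDIDATE_RE.findall(text):
        try:
            found.add(str(ipaddress.ip_address(candidate)))
        except ValueError:
            pass  # looked like an IP (e.g. 999.1.1.1) but is not valid
    return sorted(found)


def normalize(line):
    """Parse one syslog line and attach illustrative p_ indicator fields."""
    match = SYSLOG_RE.match(line)
    if match is None:
        raise ValueError("line does not match the simplified RFC 5424 layout")
    fields = match.groupdict()
    hostname = fields["hostname"]
    return {
        "hostname": hostname,
        "appname": fields["appname"],
        "message": fields["message"],
        "p_log_type": "Syslog.RFC5424",
        "p_event_time": fields["timestamp"],
        # Treat dotted hostnames as domain-name indicators (a simplification).
        "p_any_domain_names": [hostname] if "." in hostname else [],
        "p_any_ip_addresses": extract_ips(fields["message"]),
    }


# Hypothetical sample line; not taken from the original post.
SAMPLE = (
    "<13>1 2020-11-11T10:15:00Z web01.example.com sshd 1234 - - "
    "Accepted password for alice from 203.0.113.50 port 22"
)
record = normalize(SAMPLE)
print(json.dumps(record, indent=2))
```

The point of the sketch is that once every log type carries the same indicator fields, a single search can span all of them regardless of the original format.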

Panther standard fields

Let's take a richer log type as a second example, such as AWS CloudTrail, which has the following structure by default:

After normalization with Panther, the same AWS CloudTrail log now looks like this:

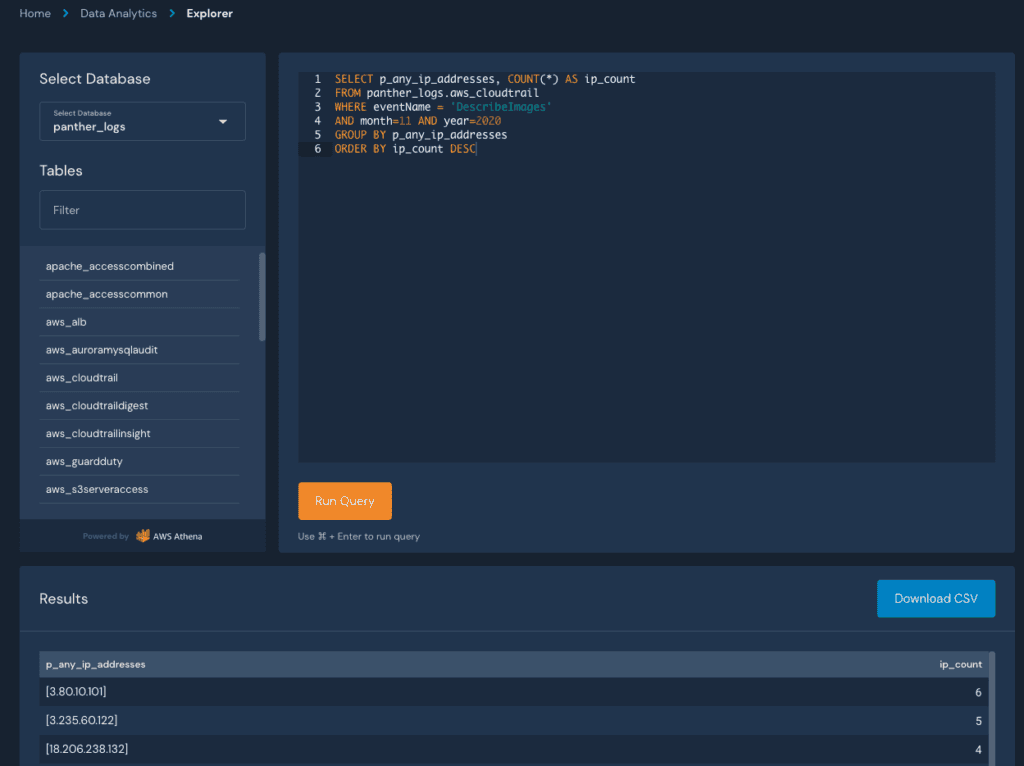

Now, this normalized data (including the p_ fields) can be searched with SQL in Panther's Data Explorer, organized into tables by their classified log type (e.g. CloudTrail, VPC Flow, Syslog) and databases by the result of processing (i.e. all logs, rule matches, or metadata):
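To give a feel for the kind of query this enables, here is a small runnable sketch that uses Python's built-in `sqlite3` module as a stand-in for the real data warehouse. The table name `aws_cloudtrail`, the sample rows, and the time window are assumptions for illustration; the exact table and database names in your environment will differ.

```python
import sqlite3

# In-memory SQLite database standing in for the real data warehouse.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE aws_cloudtrail ("
    "p_event_time TEXT, eventName TEXT, sourceIPAddress TEXT)"
)
# Hypothetical sample rows; ISO 8601 UTC strings sort chronologically.
conn.executemany(
    "INSERT INTO aws_cloudtrail VALUES (?, ?, ?)",
    [
        ("2020-11-10T08:00:00Z", "ConsoleLogin", "203.0.113.50"),
        ("2020-11-10T08:05:00Z", "ListBuckets", "203.0.113.50"),
        ("2020-11-10T08:06:00Z", "ListBuckets", "203.0.113.50"),
        ("2020-10-01T12:00:00Z", "ConsoleLogin", "203.0.113.50"),  # too old
        ("2020-11-10T09:00:00Z", "ConsoleLogin", "198.51.100.7"),  # other IP
    ],
)

# Which API calls did one source IP make since a given time?
CLOUDTRAIL_QUERY = """
SELECT eventName, COUNT(*) AS events
FROM aws_cloudtrail
WHERE sourceIPAddress = ?
  AND p_event_time >= ?
GROUP BY eventName
ORDER BY events DESC, eventName
"""
activity = conn.execute(
    CLOUDTRAIL_QUERY, ("203.0.113.50", "2020-11-04T00:00:00Z")
).fetchall()

for event_name, count in activity:
    print(event_name, count)
```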

This SQL shell provides power and flexibility in data analytics, but what if we wanted to search for hits across all of our data sources? This is where Panther's indicator search comes in!

Searching for Indicators

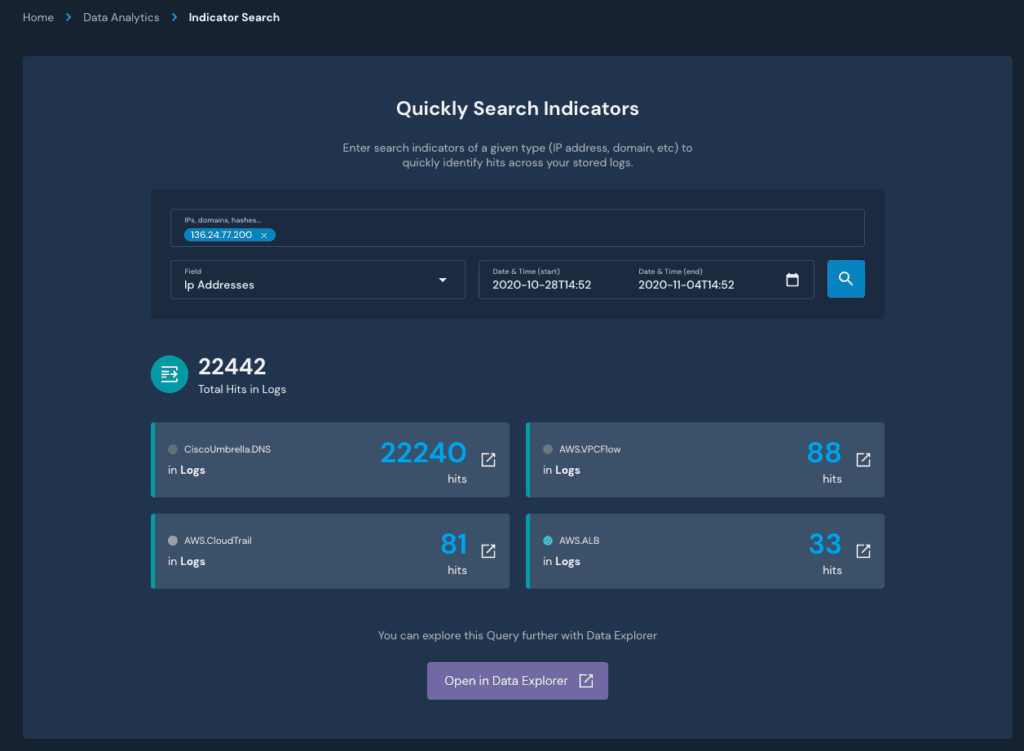

To correlate indicators across all of your normalized logs, you can use the Indicator Search. It's a great starting point for proactive hunting or triaging a generated alert.

Typical indicator fields include IPs, Domains, Usernames, or anything that can be correlated as the result of a string of related activity. Take SSH traffic, for example. When a user connects to a new host, their IP address is present in the netflow data (Layer 3 or 7 network traffic) and the SSH session (syslog). Using the Indicator Search, you can identify these links within a given timeframe and find related activity to answer questions in an investigation.
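Conceptually, an indicator search is a lookup of one value across the shared indicator fields of every log type within a time window. The sketch below illustrates that idea in plain Python; it does not reflect how Panther implements the feature, and the `indicator_search` function, the in-memory `tables` structure, and the sample records are all hypothetical.

```python
from datetime import datetime, timezone

# Indicator fields shared by every normalized record (illustrative subset).
INDICATOR_FIELDS = ("p_any_ip_addresses", "p_any_domain_names")


def parse_time(value):
    """Parse an ISO 8601 UTC timestamp such as 2020-11-10T08:00:00Z."""
    parsed = datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ")
    return parsed.replace(tzinfo=timezone.utc)


def indicator_search(tables, indicator, start, end):
    """Return {log_type: [matching records]} for records in [start, end)."""
    results = {}
    for log_type, records in tables.items():
        matched = [
            record
            for record in records
            if start <= parse_time(record["p_event_time"]) < end
            and any(indicator in record.get(f, []) for f in INDICATOR_FIELDS)
        ]
        if matched:
            results[log_type] = matched
    return results


# Hypothetical normalized records, keyed by log type.
tables = {
    "AWS.VPCFlow": [
        {"p_event_time": "2020-11-10T08:00:00Z",
         "p_any_ip_addresses": ["203.0.113.50", "10.0.0.5"]},
        {"p_event_time": "2020-10-01T08:00:00Z",  # outside the window
         "p_any_ip_addresses": ["203.0.113.50", "10.0.0.5"]},
    ],
    "Syslog.RFC5424": [
        {"p_event_time": "2020-11-10T08:00:02Z",
         "p_any_ip_addresses": ["203.0.113.50"],
         "p_any_domain_names": ["web01.example.com"]},
    ],
    "AWS.CloudTrail": [
        {"p_event_time": "2020-11-10T09:00:00Z",
         "p_any_ip_addresses": ["198.51.100.7"]},
    ],
}

window_start = parse_time("2020-11-04T00:00:00Z")
window_end = parse_time("2020-11-11T00:00:00Z")
search_hits = indicator_search(tables, "203.0.113.50", window_start, window_end)
hit_counts = {log_type: len(rows) for log_type, rows in search_hits.items()}
print(hit_counts)
```

In the SSH example above, the same IP shows up in both the network flow records and the syslog session record, which is exactly the link this kind of search surfaces.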

In this example, let's find associated hits for the past week from the IP extracted in the CloudTrail event above:

Searching CloudTrail Indicators

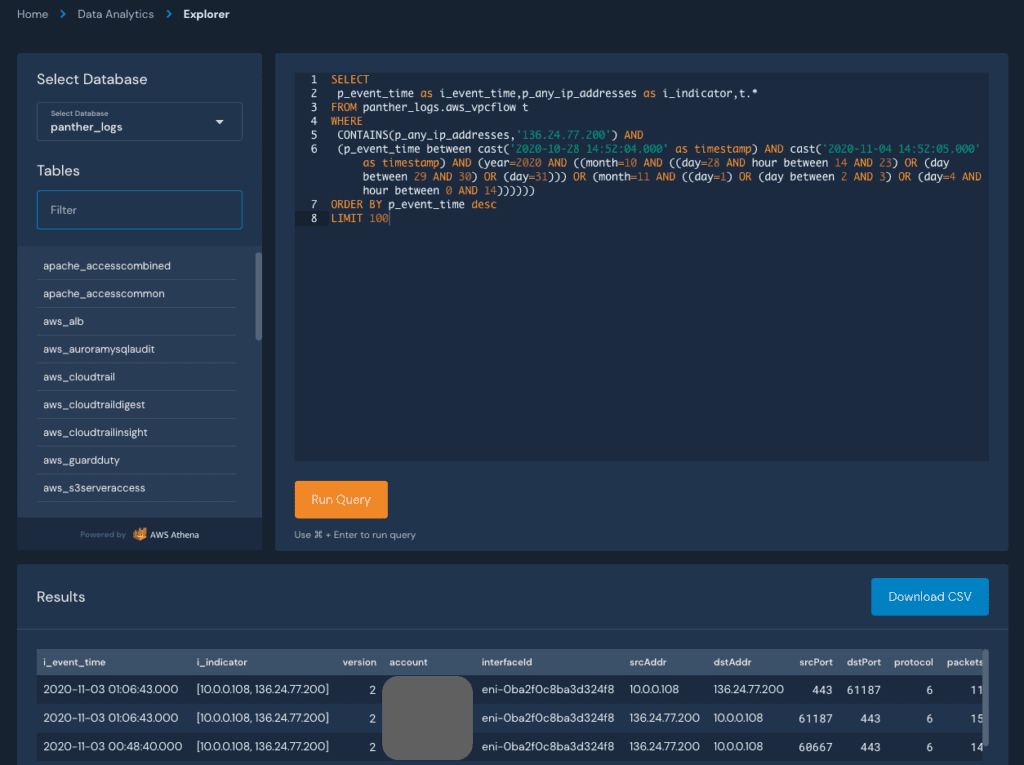

If we click any of the arrows to see the hits, we'll get redirected to Panther's Data Explorer with an automatically generated SQL query to get directly to the data:

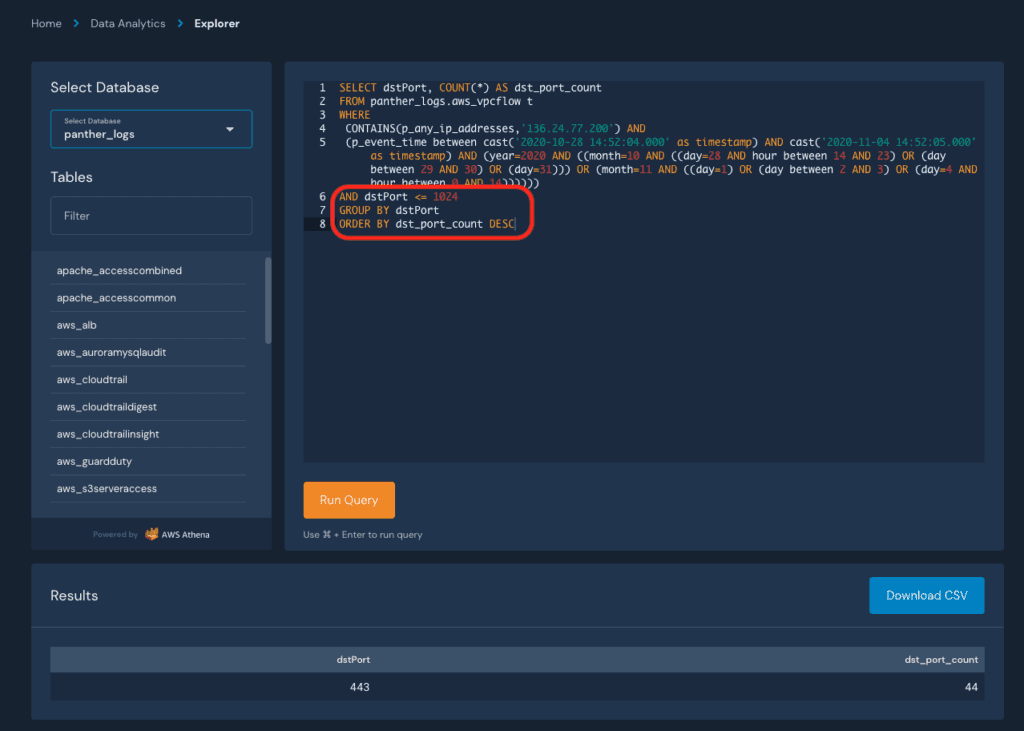

From here, we can modify the query to search for specific behaviors, such as the distinct destination ports accessed:
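As an illustration of that kind of refinement, the sketch below again uses Python's built-in `sqlite3` module in place of the real data warehouse. The `aws_vpcflow` table, its `srcaddr` and `dstport` columns, and the sample rows are assumptions modeled on VPC Flow Logs, not the query that Panther generates.

```python
import sqlite3

# In-memory SQLite database standing in for the real data warehouse.
flow_db = sqlite3.connect(":memory:")
flow_db.execute(
    "CREATE TABLE aws_vpcflow ("
    "p_event_time TEXT, srcaddr TEXT, dstaddr TEXT, dstport INTEGER)"
)
# Hypothetical flow records for the IP under investigation and one other host.
flow_db.executemany(
    "INSERT INTO aws_vpcflow VALUES (?, ?, ?, ?)",
    [
        ("2020-11-10T08:00:00Z", "203.0.113.50", "10.0.0.5", 22),
        ("2020-11-10T08:01:00Z", "203.0.113.50", "10.0.0.5", 22),
        ("2020-11-10T08:02:00Z", "203.0.113.50", "10.0.0.9", 443),
        ("2020-11-10T08:03:00Z", "203.0.113.50", "10.0.0.7", 3389),
        ("2020-11-10T08:04:00Z", "198.51.100.7", "10.0.0.5", 80),
    ],
)

# Refine the search: which distinct destination ports did this IP reach?
PORTS_QUERY = """
SELECT DISTINCT dstport
FROM aws_vpcflow
WHERE srcaddr = ?
ORDER BY dstport
"""
ports = [row[0] for row in flow_db.execute(PORTS_QUERY, ("203.0.113.50",))]
print(ports)
```

A short list of ports like this is often enough to suggest the next pivot, for example checking host logs for the services listening on them.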

This pattern of detecting, searching, pivoting, and refining can be repeated until you've answered all of the pertinent questions in your investigation. Findings can also be exported as CSV to store in your incident management system.

Telling the Whole Story

In this blog, we demonstrated how you can use structured data, SQL queries, and normalized indicators to stitch together a complete picture of an incident that touched multiple systems. Two recent and notable additions in Panther further improve this workflow: support for custom logs, which significantly increases the types of data you can send to Panther, and integrations with new SaaS log sources, like CrowdStrike, which pull data directly from APIs.

At the end of the day, any seasoned security practitioner will tell you that good security starts with good data. That's why we built Panther's data infrastructure on proven big data patterns that offer better performance at scale than traditional SIEMs. And that's also why Panther integrates with Snowflake to provide security teams a best-of-breed data warehouse to power robust investigations against huge volumes of data.

See it in Action

To learn more about how your team can build a world-class security program around terabytes of centralized and normalized data, watch our on-demand webinar: Threat Hunting at Scale with Panther.
